PART II: NOW

THE METHANE CATASTROPHE THAT MAY AWAIT US TOMORROW

What happened at the end of the Permian was long, long ago, but

not far, far away. The catastrophic release of methane from the

seafloor is not just something that happened long ago. It could --

unless we change our way of dealing with our planet, and change

it fast -- happen again tomorrow.

For most people, global warming -- the

gradual and almost imperceptible increase in the world's average

temperature -- is something that is only a dim and distant threat

far in the future...if even then. The amount of global warming

that most of us are likely to experience in our lifetimes is on

the order of a degree or so. Celsius or Fahrenheit (it happens

to be Celsius, in which scale each degree is 1.8 times that of

a Fahrenheit degree): it hardly matters. Such small temperature

changes make no difference in the lives of most people. After

all, the variability of temperature over the course of a day --

which from where I write is about 17°C (30°F) -- usually

means nothing to us, and whether this spring happens to be a bit

warmer or cooler than last year is typically quite unnoticeable.

Moreover, in the affluent parts of the globe, it is easy to compensate

for such minor temperature differences: with more or less clothing,

with a bit more heat during a cold winter or a bit more air conditioning

during a hot summer. Just change the setting on the thermostat.

The fact that sea level is rising (though

not by much: during the 21st century sea level is expected to

rise less than 40 centimeters/16 inches, about 3/4 due to the

thermal expansion of water, and the rest due to glacier and icecap

melting: Raper and Braithwaite, 2006; though a new report suggests

that a rise of between 50 and 140 centimeters -- 20 to 55 inches

-- may be more probable: Rahmstorf, 2007), and that some areas

of the world will eventually be flooded, is known to the better-read

members of the population. But even for them, warming-induced

sea level changes seem remote. Yes, parts of New York City will

be flooded in a few centuries, along with parts of many other

coastal cities, but none living today will be around to witness

such flooding. Parts of Bangladesh will go under water, and some

island nations may disappear beneath the waves, but, again, these

events will occur in a distant future, to other people, in faraway

lands.

In addition to the indifference caused

by our ability to control our immediate environments, many people

have been apathetic about global warming because they have been

told that there is considerable disagreement among scientists

regarding whether there actually is warming, and, if so, exactly

how much is caused by -- and therefore can be controlled by --

human activities. Unfortunately, these people have been misled,

and, in most cases, deliberately misled. There are always disagreements

among scientists, especially those who are conscientious in their

endeavors. That is simply the way that science works. Disagreement

helps determine truth, because it is disagreement that drives

scientists to look for the evidence that will decide the issue.

Nature -- the "real world" -- is always the decision

maker.

Regarding global warming -- based on

the evidence -- the vast majority of climate scientists are in

agreement about three things:

(1) global warming is real, and will continue to increase,

(2) most global warming is due to human activity, specifically

the burning of carbon fuels, particularly fossil fuels (carbon

fuels include wood, peat, and charcoal in addition to the fossil

fuels: oil, natural gas, and coal), and

(3) as global warming continues, it will have increasingly adverse

effects on human beings, our environment, our fellow creatures,

and the global economy.

It does not seem worthwhile to review

the enormous body of evidence for global warming here. There

are many fine books and a constant stream of scientific papers

on the subject. Some of these papers occasionally attract enough

attention that their findings make the daily newspapers. A few

points, however, should be made.

First, the relation between atmospheric

levels of the greenhouse gas carbon dioxide and global temperatures

is clear. During the Phanerozoic (the last 543 million years),

when atmospheric carbon dioxide levels have been high, global

temperatures have been high; when low, global temperatures have

been low. The only exception to this correlation was in the Late

Ordovician (about 440 million years ago), when there was an ice

age despite apparently high levels of carbon dioxide. Other suspected

exceptions seem to have been based on the erroneous presumption

that certain fossils that help provide ancient temperature information

were not altered by conditions during and after their burial,

when, in fact, they were (Pearson, 2001; Schwarzchild, 2001).

The Ordovician exception has attracted further investigation.

But in the 440 million years since that time, there have been no other

exceptions (for a review of the authors' own evidence and that of others,

see Royer, 2004). High levels of atmospheric carbon dioxide increase

global temperatures (see Berner and Kothavala, 2001, diagram in

previous section) because carbon dioxide absorbs infrared radiation

emitted by the Earth's surface, and thus helps the Earth retain heat.

Second, the current warming is not, as

has been alleged, just part of the natural variation. The current

warming, rather, is quite exceptional. And third, it is due to

human activity. Scientists, who generally are by training (if

not by temperament as well) extremely cautious about reaching

conclusions, especially in controversial matters, used to be very

careful about attributing global warming to human activities.

With mounting evidence, that caution has dissipated. In the past

few years, the tone of scientific papers about climate change

has shifted. Now scientists refer to anthropogenic (human-caused)

global warming as a matter of course, and many seem quite (and

properly) concerned that their repeated warnings and calls for

remedial action are not being taken seriously enough.

A single graph provides a compelling

answer to those who still disbelieve the connection between human

activity, atmospheric carbon dioxide, and global temperatures. It

traces the amount of carbon dioxide in the atmosphere over a period

of 350,000 years, based on samples of ancient air bubbles caught

in the ice at the Vostok Station in Antarctica, the most physically

remote place on the surface of the planet. There, as snow slowly

accumulated over the ages (Antarctica is technically a desert,

because it receives very little precipitation), the snow also

trapped tiny bubbles of air. From ice cores obtained by drilling

through the Antarctic ice, scientists carefully extract that air,

and measure the percentage of carbon dioxide, and the isotopes

of oxygen. The oxygen isotopes can help reveal the temperatures

of long ago. Thus, from the same tiny bubble, scientists can know

both the amount of carbon dioxide in the air, as well as the temperature.


Atmospheric carbon dioxide levels

and global temperatures over the past 350,000 years. (kyr BP means thousands of years before present.)

The scale at the left refers to the green line, and indicates

the level of carbon dioxide in the atmosphere. Note that it varies

from about 200 to 300 parts per million by volume (ppmv).

Temperatures are indicated by the blue

scale at the right, which refers to the blue line. Temperature

variation over the past 350,000 years is about 11°C. (Temperature

here is measured by the Kelvin scale, in which each degree is

exactly the equivalent of a degree Celsius, or C. Each degree

Celsius equals 1.8 degrees Fahrenheit.)

Notice how temperature closely varies

with carbon dioxide level -- except at the extreme right. Here,

for emphasis, the green line for carbon dioxide has been changed

to red, to indicate the dramatic increase in atmospheric carbon

dioxide -- in just two centuries. That red line, incidentally,

has reached 380 ppmv as of mid-2005. (Rahmstorf, 2004)


The graph shows atmospheric carbon dioxide

(CO₂) and global temperature rising and falling in lock-step

for these hundreds of thousands of years, right up until the present

(the last hundred and fifty years or so). Then, a vertical red

line, marked "anthropogenic CO₂," abruptly --

and to those concerned about global warming, alarmingly -- spikes

upward. The blue line designating temperature as yet records no

change in response to this surge in atmospheric carbon dioxide

(Rahmstorf, 2004, figure 1), but it will.

(According to newer Antarctic ice core

studies, the same pattern holds back as far as 650,000 years.

Though the level of atmospheric carbon dioxide reached about 300

ppmv twice at times other than the present during that 650,000-year

period, it certainly never exceeded that level until the

present, and is now at 380 ppmv: Siegenthaler, 2005; Brook, 2005.)

Though the red "anthropogenic CO₂"

line spikes straight up, this reflects the fact that the graph

covers a period of more than a third of a million years; hence,

the graph compresses two centuries of change into an almost vertical line. This

is useful for comparing the rise and fall of carbon dioxide and

global temperature over long expanses of time, and in putting

the current spike in carbon dioxide in its proper long-term perspective,

but it does not provide insight into the annual changes in carbon

dioxide. A different graph, showing the increase in atmospheric

carbon dioxide over the past several decades, furnishes those

details.

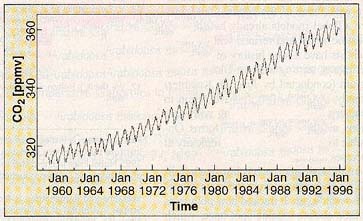

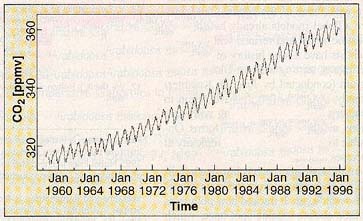

Recent increases in atmospheric

carbon dioxide. (Crutzen and

Ramanathan, 2000)


Four things should be noted about this

graph. First, it only records the changes in atmospheric carbon

dioxide over the past few decades. Anthropogenic global warming

has been happening at least since the beginning of the industrial

age, about 200 years ago, but scientists only have precise information

from about the past 40 years. Thus, while the graph begins at

less than 320 ppmv (parts per million by volume), the actual pre-industrial

average of atmospheric carbon dioxide is 220 ppmv, as averaged

over 420,000 years (Falkowski, 2000). (The immediate pre-industrial

level of carbon dioxide, from about 200 years ago, may have been

about 280 ppmv. This value is commonly employed in climate assessments.

Over the past 1000 years, this value has remained almost constant,

with a variation of no more than 10 ppmv.) This means that the

graph omits a major part of the anthropogenic carbon dioxide increase

over the past 200 years, simply because we were not engaged

in the precision monitoring of carbon dioxide prior to about 40

years ago. As noted previously, atmospheric carbon dioxide has

now reached 380 ppmv.
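The size of that omission can be estimated with simple subtraction. The sketch below is a rough check, not a reconstruction of the record: it takes the 280 ppmv pre-industrial level and the 380 ppmv current level from the text, and assumes a hypothetical starting value of 315 ppmv for the monitored record, since the text says only that the graph begins below 320 ppmv.

```python
# Rough estimate of how much of the anthropogenic CO2 rise predates the
# monitored record shown in the graph. All values are in ppmv.
PREINDUSTRIAL_PPMV = 280.0  # immediate pre-industrial level, from the text
CURRENT_PPMV = 380.0        # mid-2005 level, from the text
RECORD_START_PPMV = 315.0   # hypothetical start of the record (text: "less than 320")


def share_before_monitoring(preindustrial: float, record_start: float,
                            current: float) -> float:
    """Fraction of the total rise (preindustrial -> current) that happened
    before monitoring began."""
    total_rise = current - preindustrial
    unrecorded_rise = record_start - preindustrial
    return unrecorded_rise / total_rise


share = share_before_monitoring(PREINDUSTRIAL_PPMV, RECORD_START_PPMV, CURRENT_PPMV)
print(f"Share of the rise not shown on the graph: {share:.0%}")
```

Under these assumptions, roughly a third of the rise since pre-industrial times predates the graph; a different assumed starting value changes the result accordingly.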


Measuring Carbon Dioxide

The amount of carbon dioxide in the atmosphere can be stated

in a number of different ways. The most common way is by indicating

the quantity of CO₂ in ppmv (parts per million by volume).

The current (as of June 2005) amount of CO₂ is over 380

ppmv, meaning that if we took the entire atmosphere and divided

it into a million equal parts, CO₂ would constitute 380

of those parts. This quantity could also have been expressed

as a percentage: 0.038%, but that is such a tiny percentage that

it seems insignificant. Working with such tiny percentages also

tends to cause errors, as when decimal points are misplaced,

so the ppmv value is used instead.

Sometimes scientists

employ weight in place of volume. In such cases, the designation

ppmw is used, indicating parts per million by weight. Weight

and volume measures are not identical (think of a cup of air

versus a cup of lead), so scientists need to specify which measuring

system they are using.
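The conversions in this sidebar are simple ratios. The sketch below assumes ideal-gas behavior (so that a volume fraction equals a mole fraction) and molar masses of about 44.01 g/mol for carbon dioxide and about 28.97 g/mol for dry air; the function names are illustrative, not from any particular library. Because a CO₂ molecule is heavier than the average air molecule, the same amount of CO₂ is a larger number when expressed by weight.

```python
# Converting a CO2 mixing ratio between ppmv, percent, and ppmw.
MOLAR_MASS_CO2 = 44.01      # g/mol
MOLAR_MASS_DRY_AIR = 28.97  # g/mol, mean molar mass of dry air


def ppmv_to_percent(ppmv: float) -> float:
    """1% is 10,000 parts per million."""
    return ppmv / 10_000


def ppmv_to_ppmw(ppmv: float, gas_molar_mass: float,
                 air_molar_mass: float = MOLAR_MASS_DRY_AIR) -> float:
    """For an ideal gas, volume fraction equals mole fraction; multiplying by
    the ratio of molar masses turns a mole fraction into a mass fraction."""
    return ppmv * gas_molar_mass / air_molar_mass


print(ppmv_to_percent(380))                      # 0.038
print(round(ppmv_to_ppmw(380, MOLAR_MASS_CO2)))  # 577
```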

Another system is that

employed for carbon isotopes, as mentioned previously. This system

uses per mil, meaning parts per thousand. By using per mil rather

than percent (%, that is, parts per hundred), a typical negative

carbon isotope shift becomes 3 per mil, rather than 0.3%.

The per mil value is less likely to cause errors, and is easier

to understand and work with.
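Isotope shifts are usually reported in "delta" notation: the ratio of heavy to light isotopes in a sample is compared with that of a reference standard, and the fractional difference is expressed in per mil. The sketch below uses a hypothetical placeholder for the standard's ratio (not the official value of any real standard) to show that a sample whose ratio is 0.3% below the standard's reads as -3 per mil.

```python
# Delta notation for isotope ratios, reported in per mil (parts per thousand).
# REFERENCE_RATIO is a hypothetical placeholder, not a real standard's value.
REFERENCE_RATIO = 0.0112


def delta_permil(sample_ratio: float, standard_ratio: float) -> float:
    """Fractional deviation of a sample's isotope ratio from a standard, x1000."""
    return (sample_ratio / standard_ratio - 1.0) * 1000.0


def permil_to_percent(value_permil: float) -> float:
    """Per mil is parts per thousand; percent is parts per hundred."""
    return value_permil / 10.0


# A sample whose heavy/light ratio is 0.3% below the standard's.
sample_ratio = REFERENCE_RATIO * (1.0 - 0.003)
shift = delta_permil(sample_ratio, REFERENCE_RATIO)
print(f"{shift:.1f} per mil = {permil_to_percent(shift):.1f}%")
```

Because the delta value is a ratio of ratios, the choice of placeholder does not affect the result; only the sample's deviation from the standard matters.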


Second, the measurements for the graph

were taken at the Mauna Loa Observatory on Hawaii (by the recently

deceased Charles Keeling, who figured out how to measure atmospheric

carbon dioxide and is one of the discoverers of global warming).

The Observatory sits high on the northern flank of the volcano, almost

3400 meters (more than two miles) above sea level. Mauna

Loa is an excellent place from which to monitor atmospheric concentrations

of carbon dioxide, as it is far from the most significant sources

of that gas; indeed, it is probably one of the best places in

the northern hemisphere from which to conduct such measurements.

(In fact, the rise in atmospheric carbon dioxide was first detected

there.) Third, the sawtooth pattern simply

reflects small seasonal variations around the general trend, and so

is unimportant. Fourth, and quite important, the

general trend of the line curves slightly upward with time (look

at the line from its lower left corner). This means that the problem

is getting worse from year to year, not getting better or merely

staying the same.
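The difference between the seasonal sawtooth and the long-term curve can be illustrated with a synthetic series. The sketch below is hypothetical throughout: the trend and seasonal coefficients are invented for illustration and are not fitted to the Mauna Loa data. Averaging each year's twelve monthly values cancels the seasonal cycle, and the growing year-to-year increments show what an upward-curving trend means: the annual rise itself gets larger.

```python
import math

# Hypothetical monthly CO2 series: an accelerating trend plus a seasonal cycle.
# The coefficients are illustrative only and are NOT fitted to real data.
BASE_PPMV = 315.0
LINEAR_RATE = 0.8         # ppmv per year
ACCELERATION = 0.012      # ppmv per year squared (the upward curvature)
SEASONAL_AMPLITUDE = 3.0  # ppmv


def synthetic_monthly_co2(years: int) -> list[float]:
    series = []
    for month in range(years * 12):
        t = month / 12.0  # time in years since the start of the record
        trend = BASE_PPMV + LINEAR_RATE * t + ACCELERATION * t ** 2
        seasonal = SEASONAL_AMPLITUDE * math.sin(2.0 * math.pi * t)
        series.append(trend + seasonal)
    return series


def annual_means(series: list[float]) -> list[float]:
    """Average each full block of 12 months; a full seasonal cycle sums to ~0."""
    return [sum(series[i:i + 12]) / 12.0 for i in range(0, len(series) - 11, 12)]


def annual_increments(means: list[float]) -> list[float]:
    """Year-over-year change in the deseasonalized level."""
    return [later - earlier for earlier, later in zip(means, means[1:])]


monthly = synthetic_monthly_co2(40)
increments = annual_increments(annual_means(monthly))
print(f"first annual rise: {increments[0]:.3f} ppmv")
print(f"last annual rise:  {increments[-1]:.3f} ppmv")
```

In this made-up series the annual rise grows steadily from year to year; if the trend were a straight line, the increments would stay constant.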

(The increase in the level of atmospheric

carbon dioxide may in part be accelerating due to global warming

itself. In the summer of 2003, Europe experienced one of the greatest

heat waves ever. Temperatures soared some 6°C [10.8°F]

above the average recorded since 1851, and rainfall was down 50%

from its long-term average [Baldocchi, 2005]. This caused a drop

in primary productivity -- plant growth -- of some 30%, and a

major increase in the release of carbon dioxide in the affected

region [Ciais, 2005].)

An additional graph looks at the relation

between atmospheric carbon dioxide and temperature for all ice-core

air samples from which we have data on both. (The data used in

this graph also comes from the ice cores of the Vostok station.)


Relation between carbon

dioxide and global warming or cooling over the past 350,000 years. Each data point (from the Vostok, Antarctica,

ice cores) shows both a carbon dioxide measurement and one for

temperature (using oxygen isotope levels). The data points have

been divided into two groups, depending on whether the data was

taken from a time when glaciation was increasing (black points)

or decreasing (gray points). During cooling periods (glaciations),

both temperature and carbon dioxide drop; during warming periods

(deglaciations), both temperature and carbon dioxide rise. The

arrows indicate the general trends. (Falkowski, 2002)

Each point on this graph displays the

information provided by one air sample, and each is color-coded

according to whether it came from a period of glaciation (black)

or deglaciation (gray) during the most recent part of the Ice

Age. Unlike the previous graph, which traces carbon dioxide and

temperature over time, this graph plots the level of carbon dioxide

against temperature, displaying how they have varied in relation

to one another. The arrows show what has happened: the Glaciations

arrow indicates that the fall of atmospheric carbon dioxide correlates

with the fall of global temperature. During Deglaciations, it

is the opposite: the rise of carbon dioxide is correlated with

the rise of global temperature.

There is a curious and particularly disturbing

aspect to this graph. That is the direction of the arrow labeled

"Modern." Although the arrows labeled Glaciation and

Deglaciation point in opposite directions, they still define the

same linear orientation, rather as a highway defines a particular

route, even though it goes in two different directions. (Like

the hands of a clock -- taking the top of the page as the 12 o'clock

direction -- they point in about the 2 o'clock and 8 o'clock directions.)

But the "Modern" arrow veers off on its own (in about

the 12:30 direction). It does so because carbon dioxide is rapidly

accumulating in the atmosphere.

Global temperatures, however, have not

caught up. They will, and the angle at which the "Modern"

arrow veers off will most likely, but gradually, come to define

the same trend as do the Glaciations and Deglaciations arrows.

(In other words, the arrow, like the hand of a clock, will slowly

rotate clockwise from its current 12:30 position towards 2 o'clock

as global temperatures increase.) Unfortunately, unless we severely

curb emissions from the burning of fossil fuels, that arrow will

reach over 560 ppmv of carbon dioxide (double the pre-industrial

level) in the atmosphere before the end of this century.

Extended to 560 ppmv in the direction

currently indicated, the arrow projects that global temperatures may

reach about 6.5°C higher than at present. That projection

is comparable to some of the higher estimates of the amount the

planet will warm by the year 2100, so it does not seem unreasonable,

though many climate scientists believe that warming is likely

to be less. However, if the direction of the "Modern"

arrow does with time indeed slowly rotate clockwise, the planet

will be warmer, perhaps considerably warmer, than those estimates

now forecast.

Ocean acidification

In addition to warming the planet, increasing

atmospheric carbon dioxide will have a further major planetary

effect. Much of the fossil fuel carbon dioxide will enter the

ocean from the atmosphere. There it will acidify the ocean. Excess

carbon dioxide is now entering the ocean, from the atmosphere,

at the appalling rate of about a million metric tons per hour,

leading to a decrease in ocean surface pH, that is, to an increase

in acidity, of about 0.1 pH unit (Cicerone, 2004). A drop of 0.1 in pH

may not seem like much, but it represents a 30% increase

in acidity. By the end of this century, pH will have dropped by

another 0.2 to 0.4 units, possibly increasing acidity by more than 150%.

(Because pH is measured on a logarithmic scale, each drop of one pH unit

means a tenfold increase in hydrogen ion concentration, so acidity rises

geometrically rather than linearly as pH falls.) Organisms with calcium

carbonate skeletons will be hard pressed to survive.
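Because pH is the negative base-10 logarithm of the hydrogen ion concentration, a drop of d pH units multiplies that concentration by 10 to the power d. The sketch below applies that relation to the figures in the text, and also converts the "million metric tons per hour" uptake rate into annual totals, assuming a 365.25-day year and the molar masses of carbon and CO₂. A drop of exactly 0.1 works out to about a 26% increase, which is commonly rounded to 30%.

```python
# pH is -log10 of the hydrogen ion concentration, so a drop of d pH units
# multiplies that concentration by 10**d.
def acidity_increase_percent(ph_drop: float) -> float:
    """Percent increase in hydrogen ion concentration for a given pH drop."""
    return (10.0 ** ph_drop - 1.0) * 100.0


for drop in (0.1, 0.2, 0.3, 0.4):
    print(f"pH drop of {drop}: acidity up about {acidity_increase_percent(drop):.0f}%")

# Converting the uptake rate of about a million metric tons of CO2 per hour.
HOURS_PER_YEAR = 24 * 365.25
CARBON_FRACTION_OF_CO2 = 12.011 / 44.009  # mass of carbon per unit mass of CO2


def tonnes_per_hour_to_gt_per_year(rate_t_per_h: float) -> float:
    """Metric tons per hour -> billions of metric tons (Gt) per year."""
    return rate_t_per_h * HOURS_PER_YEAR / 1e9


co2_gt = tonnes_per_hour_to_gt_per_year(1e6)  # Gt of CO2 per year
carbon_gt = co2_gt * CARBON_FRACTION_OF_CO2   # Gt of carbon per year
print(f"{co2_gt:.1f} Gt CO2 per year, or {carbon_gt:.1f} Gt of carbon")
```

The 0.3 and 0.4 drops come out at roughly 100% and 150%, matching the range given later for the end of the century.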

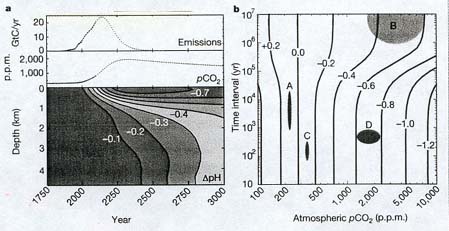

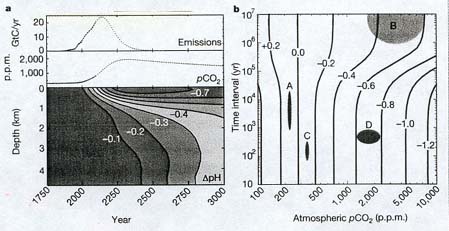

The future acidification of

the ocean due to the influx of carbon dioxide. Diagram a is composed of three parts. The topmost part

indicates the amount of carbon dioxide which will be emitted by

the continued burning of fossil fuels over the next few hundred

years (the time scale is at the bottom of the diagram). Emissions peak about

2150, and thereafter decline as fossil fuels are used up. Atmospheric

carbon dioxide (pCO₂), however, increases until

it reaches a maximum about year 2250, and then declines very slowly

(the middle part of Diagram a). The bottom part of the diagram

shows the changes in ocean acidity over the next thousand years,

according to ocean depth. Note that it is the surface ocean which

is most affected: the negative figures refer to the change in

pH, the measure of acidity. The more negative the number, the

greater the increase in acidity. Diagram

b helps put this coming acidification in perspective, by indicating

the change in ocean acidity over various time scales, from 10

years to 10 million (10^7) years. The blob labeled A shows the

range of ocean pH change during the Ice Age: the ocean was actually

slightly more alkaline (less than 0.0) than today, and its variations

took place over periods from 1000 (10^3) to 10,000 (10^4) years.

Blob B shows the range of ocean acidity over the past 300 million

years: acidity varied by up to 0.6 pH units, but over a very

long period of time. Blob C indicates the minor acid changes over

historical time, in the past century or so. Blob D shows what

will happen to ocean acidity over the next centuries, assuming

we do not change our current pattern of fossil fuel consumption:

greater acidification (0.6 to 0.8 pH units) over a vastly

shorter period of time than occurred over the 300 million years

indicated by blob B. The basic message of Diagram b: our continuing

burning of fossil fuels will acidify the ocean more -- in just

a few centuries -- than it was acidified during the past third

of a billion years. Human activities will therefore cause the

demise of creatures with calcium carbonate or calcium phosphate

skeletons (corals, mollusks, coccoliths, fish, and lots of other

organisms), which will be unable to cope with the rapidly acidifying

conditions. (Caldeira and Wickett, 2003)


A detailed examination (Orr, 2005) of

the likely effects of ocean acidification notes that not only

will the world ocean become more acidic, but that the level of

carbonate ions (CO₃²⁻) will also fall. Both of these

effects -- the increase in acidity and the decrease in carbonate

ions -- will be bad news for marine organisms with calcium carbonate

skeletons, many of which provide shelter and/or food for organisms

higher on the food chain, including human beings. Corals, for

example, offer shelter for numerous invertebrates and fish, and

are sometimes consumed by them. Pteropods, which are mollusks,

and therefore the distant relatives of oysters, clams, octopi,

and squids, are generally small, thin-shelled creatures that furnish

food for many fish, such as salmon, mackerel, herring, and cod.

They also are food for baleen whales (which strain water for food

particles). In polar waters, pteropods may be almost as important

as krill (shrimp-like crustaceans) in the diets of higher marine

organisms (Orr, 2005).

Both corals and pteropods, however, construct

their skeletons from aragonite, a less stable crystalline form

of calcium carbonate (CaCO₃). This particular mineral is

more vulnerable to dissolution by acid than is calcite, the more

stable crystalline form. As the world ocean acidifies, coral and

pteropod skeletons will begin to dissolve, and growing corals

and pteropods will find it increasingly difficult to construct

their skeletons. This ecological threat will first appear in the

world's coldest waters, because cold water can hold more dissolved

carbon dioxide (just as a cold soda holds its fizz better than a warm one) (Orr, 2005).
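The temperature dependence of gas solubility can be sketched with Henry's law and the usual exponential temperature correction. The sketch below assumes approximate literature constants for CO₂ in pure water (a solubility of about 0.034 mol/(L·atm) at 25 °C and a temperature coefficient of about 2400 K); seawater values differ somewhat, so the numbers are only indicative. It shows near-freezing water holding roughly twice as much dissolved CO₂ as water at 25 °C for the same atmospheric concentration.

```python
import math

# Approximate constants for CO2 in pure water (assumed; seawater differs).
KH_CO2_25C = 0.034       # mol/(L*atm), Henry's law solubility at 25 °C
TEMP_COEFF_CO2 = 2400.0  # K, d(ln kH)/d(1/T)
T_REF = 298.15           # K (25 °C)


def henry_constant_co2(temp_c: float) -> float:
    """Henry's law solubility of CO2 at temp_c, corrected from 25 °C."""
    temp_k = temp_c + 273.15
    return KH_CO2_25C * math.exp(TEMP_COEFF_CO2 * (1.0 / temp_k - 1.0 / T_REF))


def dissolved_co2_umol_per_l(temp_c: float, pco2_atm: float) -> float:
    """Equilibrium dissolved CO2 (micromoles per liter) for a given partial pressure."""
    return henry_constant_co2(temp_c) * pco2_atm * 1e6


PCO2 = 380e-6  # atm: 380 ppmv at one atmosphere total pressure
for temp in (0, 10, 25):
    print(f"{temp:>2} °C: {dissolved_co2_umol_per_l(temp, PCO2):.1f} µmol/L")
```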

Two different scenarios for the coming

increase in atmospheric carbon dioxide suggest that the survival

prospects for cold-water corals and pteropods in the 21st century

are not good. According to one "business-as-usual" scenario

(the IPCC's IS92a scenario) which postulates that human dumping

of carbon dioxide will continuously increase, atmospheric carbon

dioxide will rise to about 788 ppmv by the year 2100. Under this scenario,

a doubling of pre-industrial carbon dioxide levels (to 560 ppmv)

will be reached around 2050. At this level of atmospheric

carbon dioxide, the Southern Ocean's surface waters will be "undersaturated"

with regard to aragonite. That means the surface waters will not

contain enough carbonate ions for aragonite to remain stable: existing

aragonite will tend to dissolve, and organisms which depend on it

will struggle to build their skeletons (Orr, 2005).
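Saturation with respect to a carbonate mineral is usually expressed as a saturation state, Ω: the product of the calcium and carbonate ion concentrations divided by the mineral's solubility product. When Ω falls below 1, the mineral tends to dissolve. The sketch below assumes approximate solubility products for aragonite and calcite in seawater at 25 °C and salinity 35, a typical seawater calcium concentration, and hypothetical carbonate ion concentrations chosen for illustration; cold polar waters have somewhat different constants. It shows why aragonite, being more soluble, becomes undersaturated before calcite does.

```python
# Saturation state: Omega = [Ca2+][CO3 2-] / K'sp. Omega < 1 means the
# mineral tends to dissolve. Constants are approximate values for seawater
# at 25 °C and salinity 35 (assumed; polar waters differ).
CALCIUM_MOL_PER_KG = 0.0103
KSP_ARAGONITE = 6.48e-7  # (mol/kg)^2
KSP_CALCITE = 4.27e-7    # (mol/kg)^2; calcite is less soluble


def saturation_state(carbonate_mol_per_kg: float, ksp: float,
                     calcium_mol_per_kg: float = CALCIUM_MOL_PER_KG) -> float:
    return calcium_mol_per_kg * carbonate_mol_per_kg / ksp


# Hypothetical carbonate ion concentrations, in micromoles per kilogram.
for carbonate_umol in (200, 100, 60):
    co3 = carbonate_umol * 1e-6
    omega_arag = saturation_state(co3, KSP_ARAGONITE)
    omega_calc = saturation_state(co3, KSP_CALCITE)
    print(f"[CO3] = {carbonate_umol} µmol/kg: "
          f"aragonite {omega_arag:.2f}, calcite {omega_calc:.2f}")
```

At the lowest illustrative concentration, aragonite is already below 1 while calcite is still above it, the same ordering the text describes for aragonite and calcite undersaturation.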

Organisms which construct their skeletons

from carbonate minerals other than aragonite will also suffer.

Coralline red algae and echinoderms (sea urchins, starfish), which

make their skeletons from calcite that contains magnesium, will

be affected even before corals and pteropods, because this form

of calcite is even more vulnerable to dissolution than aragonite.

Organisms which use relatively pure calcite, such as foraminifera

and coccolithophores, will fare better, but not for long: calcite

undersaturation will follow aragonite undersaturation

by 50 to 100 years (Orr, 2005). The dissolution of coccolithophore

skeletons (which do contain some magnesium) will cause not only

the demise of the coccolithophores, but what could be the beginning

of a significant drop in the level of atmospheric oxygen. Coccoliths

(the shorter version of the name) are photosynthetic organisms

which contribute a substantial amount of oxygen to the air.

By 2100, under the business-as-usual scenario, the situation will be

far worse: pH will have dropped by another 0.3 to 0.4 units (relative

to today's oceanic pH), representing a 100 to 150% increase in

ocean acidity. At that point, the entire Southern Ocean (not just

the surface waters) will be undersaturated with regard to aragonite.

The sub-polar Pacific Ocean will be similarly affected (Orr, 2005).

A second scenario (the IPCC's "stabilization"

scenario, S650) projects that acidification will take place more

slowly. It assumes that atmospheric carbon dioxide will rise less

rapidly, reaching about 560 ppmv (twice pre-industrial) around 2100

before eventually leveling off at 650 ppmv. Under this scenario, the same conditions will be

reached about 50 years later than with the "business-as-usual"

scenario (Orr, 2005).

Previous studies had indicated that the

process of increasing oceanic acidification would take centuries,

but these researchers find serious acidification will take only

decades (Orr, 2005). Disastrous as this prospect is, however,

it offers only a glimpse of our near future, to about the end of

the twenty-first century. As the Caldeira and Wickett diagram

(above) indicates, acidification of the ocean will not arbitrarily

stop at the end of the present century, but will continue for

many centuries more. And all of these scenarios focus on carbon

dioxide, without attempting to include the possibly greater threat:

seafloor methane.

METHANE RELEASE; METHANE CATASTROPHE

With the warming of the planet will come

the release of hydrate methane. Already, the West Siberian Peat

Bog, containing perhaps a quarter of the world's inventory of

methane hydrate that is locked into continental permafrost, has

begun to melt and is releasing some of that store of methane.

The slight warming that the Bering Sea and the northern coastal region

of Alaska have undergone has presumably started to free some of

their own hydrate methane. The current slow release, however, will

certainly accelerate as increased temperatures heat high-latitude

continental regions, and warm ocean currents, disturbing marine

circulation patterns.

Even if hydrate methane release never

reaches a stage that can fully be described as catastrophic, it

could make the difference between quite serious and quite deadly

global warming. As time goes by, we can expect that methane will

make more and more of a contribution to what may become not mere

global warming but global scorching. But at some unpredictable

"tipping point," the accelerating release may become

overwhelming.

One way that such an accelerated release

could occur is via a major submarine landslide, which can happen

in a matter of hours, and which could trigger additional slumping

and further sudden methane release. The stability of the continental

margins in which methane hydrates reside is unclear: "It

is not known if future warming is sufficient to cause failure

of continental slopes on a global scale, but isotopic evidence

of rapid carbon release in the past is suggestive" (Buffett

and Archer, 2004).

Another release mechanism is by the venting

of vast quantities of free methane and dissociated hydrate methane

over a period of decades, perhaps triggered by marine warming

sufficient to allow the fracturing of seafloor sediments by the

free methane gas that underlies the hydrate. Either mechanism

has the potential to abruptly and massively release continental

margin methane within a short period of time, say, within a hundred

years, or a few centuries at most. These are events that can take

place in what is essentially a geological eyeblink, and will be

beyond our ability to prevent (except by ceasing to dump carbon

dioxide into the atmosphere), remedy, or mitigate.

At some point, that is, the release of

methane may indeed become catastrophic, with a surge of this powerful

greenhouse gas that could make the mere carbon dioxide warming

of the planet pale to insignificance. Some scientists

(Harvey and Huang, 1995, for instance) believe that a powerful

release of methane may await us, but probably no sooner than thousands

of years in the future (if then), when ocean warming finally penetrates

to what was at the time (1995) thought to be standard hydrate

depth. Nonetheless, they recognized that such projections could

be in error if more rapid release mechanisms prevailed.

University of Chicago geophysicists David

Archer and Bruce Buffett, who also study what will happen with

the immense store of hydrate methane in the seafloor, have estimated

that some 85% of that inventory will be released with a 3°C

(5.4°F) warming. Their calculations indicate that there are

about 3000 billion metric tons (Gt) of hydrate and some 2000 billion

metric tons (Gt) of "bubbles" -- the free gas that underlies

the hydrate -- in the seafloor worldwide (Buffett and Archer,

2004).

Though this is about half of the usual estimate

of 10,000 billion metric tons (Gt) for seafloor methane, Archer

notes that the oxygenation level of the ocean bottom is a significant

factor in determining how much methane may be contained within

the seafloor. If the deepest water of the Arctic Ocean is anoxic

-- as it could be, because of its restricted circulation -- the

methane hydrate inventory would be greater (Archer, 2006). In

addition, the Buffett and Archer calculations of the quantity

of seafloor methane rely on measurements of carbon rain, the organic

matter dropping through the water column to the ocean floor. The

contribution of giant larvaceans, with their ability to send packages

of organic matter rapidly to the ocean floor, has, according to

their discoverers, been overlooked in previous estimates of carbon

rain, which have therefore been too low by 50 to 100% (Robison, 2005).

While Buffett and Archer project a loss

of 85% of seafloor methane with a warming of 3°C, a more conservative

estimate finds that about 2000 billion metric tons (Gt) of methane

could be released with a seafloor temperature increase of 5°C

(9°F) (Hornbach, 2004). (That is one-fifth of the usual estimate

of 10,000 Gt of methane hydrate in the world's continental margins.)

That's more than 2 1/2 times the amount of carbon as in the atmosphere.

(Buffett and Archer's estimated release of 85% of their calculated

quantity of seafloor methane amounts to about 4250 Gt, more than five times

the carbon in the atmosphere, or about 8500 Gt, more than ten times that carbon,

if seafloor methane is 10,000 Gt.) The carbon in the atmosphere, moreover, is largely

in the form of carbon dioxide, which is a far less powerful greenhouse

gas than methane. Though this methane would quickly be oxidized

-- to carbon dioxide -- once it reached the atmosphere, even its

short-term presence would deliver a stunning jolt of heat to the

planet. The resulting carbon dioxide would maintain that heat

over a much longer term.

At the end of the Permian, carbon dioxide

was initially injected into the atmosphere by the Traps eruptions.

These eruptions were presumably episodic, spread out over hundreds

of thousands of years, interspersed with long periods of dormancy.

Nonetheless, at least one of these episodes was sufficient

to release vast quantities of seafloor methane. As noted previously,

the primary trigger for seafloor methane release was presumably

the direct heating of the PaleoArctic continental margin by Siberian

Traps volcanism, particularly by the emplacement of magmatic sills

within the seafloor sediments. No doubt, however, volcanic carbon

dioxide also played a role in warming the planet and releasing

hydrate methane.

But while not as dramatic, our own releases

of carbon dioxide from the burning of fossil fuels well exceed,

on average, those of the Siberian Traps. While not episodic, nor

as sudden, our releases are considerable, continuous, and increasing.

In no more than 300 years, virtually all of the accessible fossil

fuel carbon reservoir -- some 5000 billion metric tons (Gt) --

will have been transferred to the atmosphere, in the form of carbon

dioxide, if we continue to burn up our fossil fuels.

To put this carbon dioxide release into

perspective, it is roughly comparable to the total amount of carbon

dioxide injected into the atmosphere by the Siberian Traps volcanism.

Depending on the estimate used, total Siberian Traps CO₂

may have been about 1/2, 2 1/2, or 4 times the amount of CO₂

that will be released from the burning of fossil fuels (estimates

based on Leavitt, 1982 and Gerlach and Graeber, 1985, as cited

by Beerling and Berner, 2002, and Javoy and Michaud, 1989, as

cited by Grard, 2005). Moreover, this colossal quantity of carbon

dioxide will not be released over some 900,000 ± 800,000

years, as it was at the end of the Permian (Renne and Basu, 1991),

but in the course of just three centuries. That amounts to an

anthropogenic rate of carbon dioxide release that is roughly tens

to perhaps thousands of times faster than the average rate of

the end-Permian.
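The comparison of totals above can be checked with a little arithmetic. The Python sketch below assumes, as the sidebar on the numbers does, that each published average annual release rate (10.5, 48, and 70.4 million metric tons of carbon dioxide per year) was sustained for about one million years, and compares the resulting totals with the carbon dioxide from burning some 5000 Gt of fossil fuel carbon, converting carbon to carbon dioxide with the molar-mass ratio 44/12. The constant and function names are illustrative only.

```python
# Compare total Siberian Traps CO2 with the CO2 from burning ~5000 Gt of fossil carbon.
# Assumption: each average annual rate below was sustained for ~1 million years.

CO2_PER_C = 44.0 / 12.0      # molar mass of CO2 / molar mass of carbon
FOSSIL_CARBON_GT = 5000.0    # accessible fossil-fuel carbon, Gt of carbon
DURATION_YEARS = 1_000_000   # assumed length of Siberian Traps volcanism

# Average Siberian Traps CO2 release, million metric tons (Mt) per year
TRAPS_RATE_MT_PER_YEAR = {
    "Leavitt (1982)": 10.5,
    "Gerlach and Graeber (1985)": 48.0,
    "Javoy and Michaud (1989)": 70.4,
}


def traps_to_fossil_ratios() -> dict[str, float]:
    """Total Traps CO2 divided by total fossil-fuel CO2, for each estimate."""
    fossil_co2_mt = FOSSIL_CARBON_GT * CO2_PER_C * 1000.0  # Gt -> Mt
    return {
        source: rate * DURATION_YEARS / fossil_co2_mt
        for source, rate in TRAPS_RATE_MT_PER_YEAR.items()
    }


for source, ratio in traps_to_fossil_ratios().items():
    print(f"{source}: {ratio:.2f} times the fossil-fuel CO2")
```

The three ratios come out at roughly 0.57, 2.6, and 3.8, consistent with the figures of about 1/2, 2 1/2, and 4 given in the text.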

The numbers:

Stating that the "anthropogenic

rate of carbon dioxide release ... is roughly tens to perhaps

thousands of times faster than the average rate at the end of

the Permian" may seem to be an extraordinary claim, so here

are the numbers. The amount of fossil fuel carbon is about 5000

billion metric tons (Gt), equivalent to some 18.3 trillion metric

tons (Tt) of carbon dioxide. Released into the atmosphere over

the next 300 years, that is an average of about 61 billion metric

tons (Gt) of anthropogenic carbon dioxide per year.
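The conversion from carbon to carbon dioxide in the paragraph above uses the ratio of molar masses: every 12 tons of carbon burns to 44 tons of carbon dioxide, because two oxygen atoms (32) are added to each carbon atom (12). A minimal Python sketch of that arithmetic, with illustrative names:

```python
# Convert fossil-fuel carbon to CO2 and spread the release over 300 years.
CO2_PER_C = 44.0 / 12.0   # 44 g/mol (CO2) / 12 g/mol (C), about 3.67


def carbon_to_co2(carbon_gt: float) -> float:
    """Mass of CO2 (Gt) formed by fully oxidizing carbon_gt Gt of carbon."""
    return carbon_gt * CO2_PER_C


fossil_co2_gt = carbon_to_co2(5000.0)   # total CO2, Gt
annual_co2_gt = fossil_co2_gt / 300.0   # average release per year, Gt
print(f"{fossil_co2_gt / 1000:.1f} Tt CO2 in total, {annual_co2_gt:.0f} Gt per year")
```

This prints 18.3 Tt in total and 61 Gt per year, the figures used in the text.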

The maximum estimated

volume of Siberian Traps extrusives is about 3 million cubic

kilometers (about 720,000 cubic miles). Divided by an estimated

length of Siberian Traps volcanism of some one million years,

the average volcanic extrusion rate is 3 cubic kilometers (about

0.72 cubic miles) per year. Leavitt, 1982, estimates that each

cubic kilometer of extruded basalt releases 3.5 million metric

tons (Mt) of carbon dioxide; at 3 cubic kilometers per year, that gives 10.5 million metric

tons (Mt) of carbon dioxide as the average annual release rate

for Siberian Traps volcanism. Comparable figures from Gerlach

and Graeber, 1985, and Javoy and Michaud, 1989, are 48 million

metric tons (Mt) per year, and 70.4 million metric tons (Mt)

per year, respectively.

The anthropogenic carbon

dioxide release rate is therefore about 6000 times faster than

the average Siberian Traps release rate indicated by Leavitt,

1250 times faster than that of Gerlach and Graeber, and 850 times

faster than that of Javoy and Michaud. If the duration of Siberian

Traps volcanism was much shorter than a million years, say, on

the order of 100,000 years (the shortest duration estimated by

Renne and Basu, 1991), relative rates would be reduced by an

order of magnitude, to 600 times faster than the Leavitt estimate,

125 times faster than the Gerlach and Graeber estimate, and 85

times faster than the Javoy and Michaud estimate.
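The ratios above follow from dividing the anthropogenic rate by each Siberian Traps rate. The Python sketch below reproduces them under the sidebar's own assumptions: 3 million cubic kilometers of basalt, Leavitt's 3.5 Mt of carbon dioxide per cubic kilometer, and the Gerlach and Graeber and Javoy and Michaud annual figures read as averages over one million years. Shortening the duration tenfold raises every Traps rate tenfold and so divides every ratio by ten. The function names are hypothetical.

```python
# Ratio of the anthropogenic CO2 release rate to average Siberian Traps rates.
CO2_PER_C = 44.0 / 12.0
FOSSIL_RATE_MT = 5000.0 * CO2_PER_C * 1000.0 / 300.0  # Mt CO2 per year, ~61,100

TRAPS_VOLUME_KM3 = 3_000_000     # maximum estimated volume of extrusives
LEAVITT_MT_PER_KM3 = 3.5         # Mt CO2 released per km^3 of basalt
ONE_MYR_RATES_MT = {             # published rates, read as 1-Myr averages
    "Gerlach and Graeber": 48.0,
    "Javoy and Michaud": 70.4,
}


def traps_rates(duration_years: float) -> dict[str, float]:
    """Average Traps CO2 release (Mt/yr) if all of it took duration_years."""
    rates = {"Leavitt": TRAPS_VOLUME_KM3 / duration_years * LEAVITT_MT_PER_KM3}
    for source, rate in ONE_MYR_RATES_MT.items():
        rates[source] = rate * 1_000_000 / duration_years
    return rates


def speedups(duration_years: float) -> dict[str, float]:
    """How many times faster the anthropogenic rate is than each Traps rate."""
    return {s: FOSSIL_RATE_MT / r for s, r in traps_rates(duration_years).items()}


for years in (1_000_000, 100_000):
    for source, factor in speedups(years).items():
        print(f"{years:>9,} years  {source:<20} {factor:,.0f} times faster")
```

For one million years this gives about 5,800, 1,270, and 870; for 100,000 years about 580, 127, and 87, which the text rounds to 6000, 1250, and 850 (and 600, 125, and 85).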

In examining these figures,

however, it is important to note that the Siberian Traps carbon

dioxide release rates are averages, and that large igneous province

volcanic eruptions are presumably highly episodic. Consequently,

actual carbon dioxide release rates are probably quite variable

over the course of the eruption's duration.

This enormous release of carbon dioxide

ought to be quite sufficient to warm air and ocean enough to liberate

a vast quantity of methane from its icy seafloor muds.

A massive methane release, and perhaps

a true methane catastrophe, is just around the corner, geologically

speaking -- as well as in human terms. Assuming we continue to

conduct business as usual, it is inevitable. When it will happen

cannot be predicted, but it will likely begin between about a

hundred and, at most, a thousand years from now. Once it starts

-- or even well before it starts -- it will be irreversible. Each

of these characteristics -- inevitability, magnitude, unpredictability,

and irreversibility -- requires further elaboration.

Inevitability

The release of seafloor methane is inevitable because we are pumping

unprecedented quantities of carbon dioxide into the atmosphere.

This carbon dioxide will warm the planet, and, in fact, is already

doing so. Though the amount of global warming thus far (that is,

in the twentieth century) is minimal -- only about 0.6°C (about

1°F), plus or minus 0.2°C -- the warming will significantly

increase during this, the twenty-first century. The most generally

accepted projections for global warming, those from the Third

Assessment Report of the UN-sponsored Intergovernmental Panel

on Climate Change (IPCC), indicate that the world's surface will

warm by between about 1.4°C and 5.8°C (2.5°F to 10.4°F)

by the end of this century (Kerr, 2001). As of the IPCC's 2007

Fourth Assessment Report, likely warming is projected at between 1.1°C

and 6.4°C (2.0°F to 11.5°F), with best estimates, depending on the emissions scenario,

between 1.8°C and 4.0°C (3.2°F to 7.2°F: IPCC

2007). Though this warming estimate represents the consensus thinking

of approximately 2500 climate scientists worldwide, recent

warming estimates indicate that it may be too conservative.
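Throughout this section, warming is given in both temperature scales. Because these figures are temperature changes, the conversion is simply a factor of 1.8 (9/5); only absolute readings, such as a reading of 38°C, also need the 32-degree offset. A minimal Python sketch of both conversions (the function names are illustrative):

```python
def delta_c_to_f(delta_c: float) -> float:
    """Convert a temperature change from Celsius to Fahrenheit degrees (no offset)."""
    return delta_c * 9.0 / 5.0


def reading_c_to_f(temp_c: float) -> float:
    """Convert an absolute temperature reading from Celsius to Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


# The IPCC 2007 projection ranges quoted above, expressed as changes.
for low, high in [(1.1, 6.4), (1.8, 4.0)]:
    print(f"{low}-{high} C of warming = "
          f"{delta_c_to_f(low):.1f}-{delta_c_to_f(high):.1f} F of warming")

print(f"a reading of 38 C = {reading_c_to_f(38.0):.1f} F")
```

This prints 2.0-11.5 and 3.2-7.2 degrees Fahrenheit for the two ranges, and 100.4°F for the absolute reading.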

Typically, climate scientists make their

projections of global warming by estimating the heating effect

of a doubling of atmospheric carbon dioxide, which has been expected

to occur by the end of the twenty-first century. (The amount of

warming that will take place as a result of a doubling of atmospheric

carbon dioxide is often referred to as "climate sensitivity,"

though this is not the precise meaning of the term: see Schlesinger

and Andronova, 2002.) Using a different and complex approach,

some scientists now believe that there could actually be somewhat

less warming than projected by the IPCC.

But these same scientists believe that

there is an even greater likelihood -- in fact, a much greater

likelihood -- that warming could considerably exceed the IPCC's

projection. According to their projections, warming by the end

of the century will likely range between 1.0°C and 9.3°C

(1.8°F to 16.7°F), with the upper estimate significantly

higher than that of the IPCC. The scientists who reached these

conclusions find them -- in a modest departure from the ordinarily

unemotional language of science -- "a disquieting result"

(Andronova and Schlesinger, 2001).

In January 2005, this disquieting result

was confirmed as a real possibility by the largest computer climate

simulation ever done. Employing computer time from almost 100,000

home computers, the study compiled the results from the climateprediction.net

experiment. (The

harnessing of huge amounts of home computer time has become a

standard activity in certain branches of science which require

such time for extremely complex calculations. Ordinary citizens

can make important contributions to science by the donation of

such unused home computer time. This endeavor is immensely valuable

for climate scientists, and readers are strongly encouraged to

assist. This project does not interfere with the ordinary use

of home computers. Details are furnished at the website, www.climateprediction.net.)

With a doubling of atmospheric carbon

dioxide, the study found, the possible global warming (that is,

the "climate sensitivity") could range from 1.9°C

(3.4°F) to as much as 11.5°C (20.7°F). Nonetheless,

the study also found that the most likely temperature increase

would be about 3.4°C (6.1°F), just as the IPCC had (Stainforth,

2005). One of the study's co-authors, Robert Spicer, pointed out

that the highest temperatures in "recent" earth history

occurred some 100 million years ago (during the Cretaceous Period),

but that global temperatures at that time were probably only about

6°C (10.8°F) higher than today's (Connor, 2005). If the

highest likely temperatures projected by the climateprediction.net

study were to come to pass, they would be without precedent in

hundreds of millions of years, perhaps for the entire Phanerozoic

(Royer, 2004).

The disquieting findings of Andronova

and Schlesinger were echoed by Richard Alley of Pennsylvania State

University, Chair of the National Research Council's Committee

on Abrupt Climate Change, at the December 2001 meeting of the

American Geophysical Union. Alley, who was discussing his committee's

newly released report "Abrupt Climate Changes: Inevitable

Surprises," stated that significant global warming could

come much more rapidly than the IPCC projects. He warned that

global temperatures could rise 10°C (18°F) in just a short

time, "tripping the switch" towards abrupt climate change

in only a few decades (Showstack, 2001).

Nonetheless, the IPCC's 2007 Fourth Assessment Report pegs climate

sensitivity (here, the warming from a doubling of atmospheric carbon dioxide)

at between 2.0°C and 4.5°C (3.6°F to 8.1°F), with

its best estimate being 3.0°C (5.4°F). This is not very

different from another new estimate that puts climate sensitivity

at between 1.5°C and 6.2°C (2.7°F to 11.2°F),

with the most likely figure being 2.8°C (5.0°F). This

estimate is based on a comprehensive study of carbon dioxide levels

and presumed temperatures over the past 420 million years (Royer,

2007). However, because the weathering of silicate rocks draws

down atmospheric carbon dioxide and restores temperatures some

100,000 to 150,000 years after a carbon dioxide outburst, this

study may well overlook short, extreme spikes of temperature.

The warming that the planet has already

experienced is not restricted to the lower atmosphere, or to the

sea surface. Scientists used to be puzzled as to where the heat

was going, because there seemed to be more heat being produced

by global warming than could be accounted for, based on atmospheric

and sea surface measurements. This is a conundrum no longer. As

many scientists had previously suspected, the heat is going into

the oceans. But they were only able to suspect that the oceans

were taking up the heat because they lacked the ability to measure

it. That has changed.

Based on the systematic investigation

of millions of temperature records from various ocean depths worldwide,

it is now clear that the "excess heat" is indeed going

into the oceans. In fact, more than 90% of the heat from global

warming has gone into the ocean, with the remaining heat having

gone into the melting of polar region ice and mountain glaciers,

and the atmosphere (Levitus, 2000). The oceanic temperature increase

(0.06°C, or about 0.1°F) is minute -- only about a tenth

of the temperature rise in the atmosphere -- but it represents

an enormous amount of heat, because of the vast ability of the

ocean to hold heat. The Atlantic, Indian, and Pacific Oceans all

record the increase, and all indicate similar heat variations

with time over the forty-year period from 1955 to 1995, all

showing a similar upward trend (Levitus, 2000):

Ocean warming, 1955-1995. The heat is measured in joules (J), but the

specific units are far less important than the general trends,

obvious in all oceans. The red lines and the red figures indicate

the approximate warming that has taken place, based on millions

of measurements. (Levitus, 2000)

Perhaps most importantly, the warming

has penetrated to deeper parts of all oceans, at depths from 300

to 1000 meters (about 1000 feet to 6/10ths of a mile), and in the

North Atlantic, even below the 1000 meter level. The total temperature

increase of 0.06°C is the average of temperature readings

down to 3000 meters (Levitus, 2000), emphasizing just how deeply

the warming has penetrated. Such a warming of the deep ocean in

such a short time was previously not thought possible. The North

Atlantic data are possibly the most startling, because that ocean

seems to be highly vulnerable to global warming, and most able

to impact climatic conditions on its periphery and worldwide,

because of its major role in driving global thermohaline circulation.
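Why an average warming of only 0.06°C down to 3000 meters matters can be shown with the basic heat relation Q = m c ΔT. The Python sketch below uses round values that are assumptions, not figures from the text: an ocean area of about 3.6 × 10^14 square meters, a seawater density of 1025 kg per cubic meter, a specific heat of about 3990 joules per kilogram per degree, and, for comparison, an atmospheric mass of about 5.1 × 10^18 kg with a specific heat of about 1005. It is an order-of-magnitude illustration, not a reconstruction of Levitus's calculation.

```python
# Rough heat uptake for a small warming of the upper 3000 m of the world ocean.
# All physical constants below are round, assumed values, not figures from the text.
OCEAN_AREA_M2 = 3.6e14        # approximate area of the world ocean
LAYER_DEPTH_M = 3000.0        # depth range averaged in the text
SEAWATER_DENSITY = 1025.0     # kg per cubic meter
SEAWATER_CP = 3990.0          # J per kg per degree (specific heat of seawater)

ATMOSPHERE_MASS_KG = 5.1e18   # approximate mass of the whole atmosphere
AIR_CP = 1005.0               # J per kg per degree (dry air, constant pressure)


def heat_joules(mass_kg: float, cp: float, delta_t: float) -> float:
    """Heat Q = m * c * dT needed to warm a mass by delta_t degrees."""
    return mass_kg * cp * delta_t


ocean_mass = OCEAN_AREA_M2 * LAYER_DEPTH_M * SEAWATER_DENSITY
q_ocean = heat_joules(ocean_mass, SEAWATER_CP, 0.06)
ratio = (ocean_mass * SEAWATER_CP) / (ATMOSPHERE_MASS_KG * AIR_CP)

print(f"0.06 C warming of the 0-3000 m layer: {q_ocean:.1e} J")
print(f"heat capacity of that layer / whole atmosphere: about {ratio:.0f} times")
```

With these assumptions, the 0.06°C warming corresponds to a few times 10^23 joules, and that layer of ocean holds several hundred times as much heat per degree as the entire atmosphere.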

As a result of the warming, the ecology

of the North Atlantic seafloor may be changing. The population

of small (5 centimeters/2 inches long) marine creatures, the sea

cucumbers (holothurians, a large group of echinoderms and thus

the cousins of starfish, sand dollars, and sea urchins) has increased

dramatically. Their numbers have jumped more than a thousand-fold

since just 1996, an increase attributed to an influx of dead algae.

Though the cause of the population surge has yet to be determined,

climate change is a likely suspect (Krieger, 2004). Most likely,

warming sea surface temperatures in the North Atlantic have resulted

in a major die-off of plankton, furnishing the ocean-bottom-dwelling

sea cucumbers with an unexpected -- and unsustainable -- bounty

of food.

The meaning of catastrophe

Catastrophe is so frequently

used in ordinary discourse that it has lost most of its meaning.

Though people in the world's poorer countries often have experience

with catastrophic war, famine, infectious disease and floods

(as this is written, half of Dacca, Bangladesh, a city of 10

million, is under water from monsoon-related flooding,

as is 60% of this country of 140 million), most of the

populations of affluent countries have no experience with catastrophe.

What passes for catastrophe are often family tragedies, which

loom large for the affected individuals, but generally assume

no wider significance.

But there are real catastrophes

-- sometimes local, sometimes regional, sometimes global -- both

for human beings and the other inhabitants of the planet.

Here is one:

North of Scotland, washed

by the North Sea to the east and the North Atlantic to the west,

lie small archipelagos called the Orkney (about 70 islands) and

the Shetland Islands (about 100 islands). Only a few of these

islands, together known as the Northern Isles, are inhabited, though during the

breeding season the islands' rocky cliffs host huge numbers of

seabirds -- guillemots (members of the auk family), Arctic terns

and Shetland kittiwakes (gull family), great skuas and Arctic

skuas (skua family) -- birds not familiar to most Americans,

Europeans, or Asians. These are subarctic birds, which generally

live far to the north of the most populated areas of the Northern

Hemisphere (though some of their relatives do live in more temperate

regions).

In recent years, according

to Seabird 2000, the bird count released a few months ago, more

than 220,000 pairs of these birds have been breeding in these

Scottish islands. But not this year. In 2004, this huge number

of birds produced virtually no young: at most, a few dozen chicks

in all. The breeding season has been a total, unprecedented failure

(McCarthy, 2004). As subarctic and Arctic regions warm, the ultimate

survival of these birds may be at risk.

This disaster may be

the first ripple of the wave of climate change-induced extinction

that will engulf the planet. The ultimate cause of the breeding

failure most probably is the warming of the eastern North Atlantic,

which has pushed warm-water phytoplankton some 1000 kilometers

(600 miles) north -- about a 10° latitude shift -- in just

40 years (Beaugrand, 2002), and a temperature increase of about

2°C (3.6°F) in the North Sea over the past 20 years (McCarthy,

2004). (Another source indicates the temperature rise was only

1°C over the past 40 years: Martin Edwards, cited by Proffitt,

2004b.) This warming has resulted in the northern movement of

the plankton that used to live in the Shetland/Orkney area, as

cold-water phytoplankton followed the retreating, cooler waters.

This movement deprived copepods, minute crustaceans which live

off the phytoplankton, of their primary food (Beaugrand, 2002).

The high mortality of

the local phytoplankton and copepods has resulted in a massive

die-off of sandeels, and young sandeels in particular. Sandeels

(often written as "sand eels"), as their name implies,

are small (adults from the various species range from about 20

to 35 centimeters/8 to 14 inches in length), elongated, eel-like

fish that prefer sandy seafloor environments, and burrow into

them when threatened. The sandeels are a major food source for

numerous other organisms.

Although at least one

scientist has proposed that the cause of the plummeting number

of sandeels may be an increase in the local population of herrings,

together with the fact that the North Sea is one of the most

overfished ocean areas of the world (Proffitt, 2004a), this seems

rather unlikely. The overfishing has been a persistent though

growing problem; the seabird reproduction failure is abruptly

new. In addition, the population explosion of sea cucumbers,

mentioned previously, confirms that the quantity of organic debris

reaching the seafloor has enormously increased. This debris presumably

consists of the remains of phytoplankton and copepods, whose

skyrocketing mortality is due to oceanic temperature change.

Dead phytoplankton and copepods may serve the dietary needs of

sea cucumbers, but the precipitous population decline of these

minute organisms has left the sandeels without sustenance.

Above the sandeels on

the food chain are larger fish, such as cod, whose numbers are

falling, marine mammals, and the birds, now too malnourished

to reproduce (McCarthy, 2004). The former food chain has been

replaced by a chain of starvation.

In the far north of

Britain, the great chain of being has been sundered.

[Postscript, 7/12/05,

updated 10/31/06: On the Northwest Coast of the United States

(northern California, Oregon, Washington), there has been a severe

decline in the amount of plankton, including copepods and krill,

in the spring and early summer of 2005 (Martin, 2005). Copepods

and krill are crustaceans, and (at up to 2.5 cm -- an inch --

in length) are among the largest of the zooplankton, which consume

the vegetative plankton (phytoplankton). Most fish depend on

the copepods and krill for their food supply, directly or indirectly.

Local and migratory seabirds depend on the fish, just as they

do in the north of Scotland. It is not surprising, therefore,

that seabirds have borne the brunt of this new food chain collapse,

as they did in the northeast Atlantic.

In the Farallon Islands,

about 40 km (25 miles) off San Francisco, seabird nesting has

plummeted. According to the Point Reyes Bird Observatory Director

of Marine Ecology Bill Sydeman, "We expect zero nesting

success" for the Cassin's auklets, a seabird which breeds

on the islands. "We've never seen anything like it"

(quoted in Martin, 2005). The 2005 Farallon Islands auklet breeding

failure was repeated in 2006. Despite thousands of nesting birds,

not a single auklet chick was hatched (Schwing, 2006). Biologists

believe this breeding failure is directly attributable to the

birds' poor nutrition. Other seabird groups have also been significantly

affected, a situation unprecedented in the thirty years of monitoring.

Further to the north, along the coast of British Columbia and

off Alaska, sea surface temperatures are the highest in fifty

years. Along the Oregon coast, these temperatures are 6°C

(11°F) higher than normal (Martin, 2005).

The plankton collapse

is attributed to a major slowdown of upwelling, in which cold

water carries nutrients up from the ocean bottom. Without the

influx of nutrients, phytoplankton fail to thrive and their numbers

are greatly reduced. This affects all organisms above them on

the food chain, including fish, seabirds, marine mammals, and

even Humpback and Blue whales (Martin, 2005). Because seabird

nesting is easily observed, its decline is an obvious sign of

serious trouble in the coastal environment. But the counts of

certain salmon stocks are down as well -- by as much as a hundredfold

(Martin, 2005).]

The salinity of the Atlantic Ocean is

also changing (Curry, 2003). Over the four decades between the

1950s and the 1990s, water closer to the poles has become fresher,

and that of the tropics has become saltier. The more poleward

water has freshened because of increasing melting of the Greenland

and Antarctic ice caps and Arctic and Southern Ocean sea ice.

The increase in tropical water salinity is due to increased evaporation:

an additional two meters (about two yards) of water evaporated over the

four decades. This represents a five to ten percent rise in the

evaporation rate in just 40 years (Curry, 2003).

Both the poleward and tropical changes

are presumptively due to global warming. Indeed, between 50°S

(about the latitude of southern Argentina) and 60°N (the latitude

of the southern tip of Greenland), upper ocean temperatures in

the western Atlantic (near the Americas) have risen about 1.0°C

(1.8°F). An increase in equatorial precipitation near the

African coast, possibly also due to global warming, has also been

noted (Curry, 2003).

Hurricane Katrina and Global Warming

(The Saffir-Simpson Hurricane Scale

divides hurricanes into five categories, according to wind strength.

A tropical storm becomes a hurricane when its winds exceed 119

kilometers/74 miles per hour. Category 1 hurricanes have winds

from 119 to 153 kilometers/74-95 miles per hour; Category 2,

154-177 km/96-110 mph; Category 3, 178-209 km/111-130 mph; Category

4, 210-249 km/131-155 mph; Category 5, over 249 km/155 mph.)
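The category boundaries above amount to a simple lookup on sustained wind speed. A minimal Python sketch using the miles-per-hour thresholds as listed in the text (the scale as it stood in 2005; the National Hurricane Center has since adjusted some boundaries slightly), with a hypothetical function name:

```python
# Upper wind-speed bound (mph) of Categories 1-4; Category 5 is anything above 155.
CATEGORY_UPPER_MPH = [(1, 95), (2, 110), (3, 130), (4, 155)]
HURRICANE_MIN_MPH = 74


def saffir_simpson_category(wind_mph: float) -> int:
    """Return 1-5 for a hurricane, or 0 for a storm below hurricane strength."""
    if wind_mph < HURRICANE_MIN_MPH:
        return 0
    for category, upper in CATEGORY_UPPER_MPH:
        if wind_mph <= upper:
            return category
    return 5


for mph in (60, 74, 100, 125, 150, 170):
    print(mph, "mph ->", saffir_simpson_category(mph))
```

Speeds falling between the listed whole-number bounds (for example 95.5 mph) are assigned to the higher category here; that is a choice made for the sketch, not part of the published scale.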

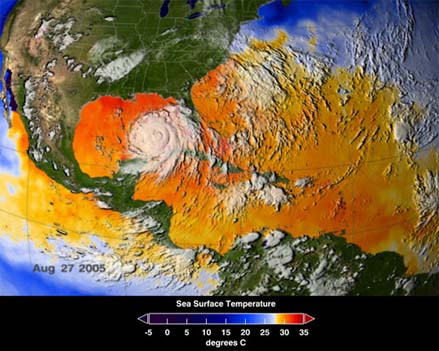

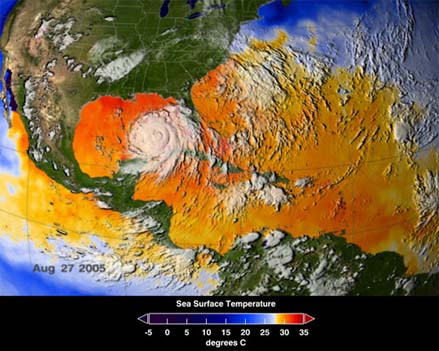

Hurricane Katrina as it approached

the Gulf Coast on August 28, 2005 (NOAA).

Hurricane Katrina crossed the southern

tip of the Florida peninsula on August 25, as a Category 1 hurricane,

and gathered strength to Category 5 during the next three days

in the Gulf of Mexico. It then lost some of that strength, to

Category 3, as it made landfall in Louisiana on August 29. Passage

to the north over land quickly dissipated its strength, so that

by August 30, it had become, once again, a tropical storm, just

as it had been on August 24 before hitting Florida.

Hurricane Katrina was a particularly

nasty hurricane, which dropped enough water into Louisiana's

Lake Pontchartrain to breach the levees surrounding the City

of New Orleans. Inadequate maintenance of these levees, together

with shoddy emergency preparations, resulted in the flooding of

about 80% of the city, and major destruction along the nearby

Gulf Coast. The total cost of the hurricane is estimated to

be between $125 billion and $150 billion, making it the costliest

natural disaster in American history.

Shortly after this colossal natural

disaster, the Wall Street Journal ridiculed the idea that there

was any connection between Hurricane Katrina and global warming.

Let's examine the facts.

First, there is no way to attribute

any particular unusual weather event to a specific or single

source. One need only consider the accuracy of television

and newspaper forecasts to recognize that if the causes and effects

of particular weather events were well understood, we would never

be caught without our umbrellas when it rains. The significant

inaccuracy of such forecasts, as well as their inability to project

more than a few days into the future, ought to convince us that

scientific weather prediction accuracy is still an elusive goal

(whatever the folks at the Old Farmer's Almanac may think).

General patterns, however, are often

known to a fair degree of accuracy. A given area may typically

have about two dozen days each year when temperatures exceed

38°C (about 100°F). Over many years, this pattern may

hold. Nonetheless, there may be exceptional years when the number

of hot days is considerably greater than two dozen, or, indeed,

when there are no hot days at all. Careful study of weather patterns,

together with mathematical modeling, can even give us some sense

of how likely it may be for a given year to depart from the general

pattern. Both trends and cycles may also alter an established

pattern, and again, these departures from the initial pattern

may be suspected or predicted.

One hurricane cycle has recently been

identified. According to the US National Oceanic and Atmospheric

Administration (NOAA), the number of hurricanes has been increasing

as part of a natural cycle. The cycle seems to run for forty

to sixty years; the number of hurricanes has been above average

since about 1995. Indeed, as part of that cycle, the number of

hurricanes forecast for 2005 is twice that of normal. So as many

hurricanes may follow Katrina as preceded it, before the hurricane

season ends on November 30.

But even if the 2005 hurricane season

is part of a natural cycle, it is characterized by a number of

exceptional events. The year 2005 marks the first time there

have been four named storms as early as July 9th since record

keeping began in 1851. (Tropical storms get names when their

wind speeds exceed 62 kilometers (39 miles) per hour.) The 26

named storms as of the end of the 2005 hurricane season exceed

the previous record number of 22, and the naming system, for

the first time, has had to extend into the use of Greek letters

as storm designations. In addition, the year 2005 marked

the earliest Category 4 hurricane (Hurricane Dennis) on record.

The 14 tropical storms whose wind speeds achieved hurricane status

are also an annual record (the previous record was 12). Finally,

Hurricane Wilma brought more than 162 centimeters (64 inches)

of rain to Isla Mujeres, an all-time 24-hour rain record for

the country of Mexico. (Though 2005 is likely to prove to be

an exceptional year for hurricanes, it may nonetheless be part

of a general trend to more numerous and ferocious hurricanes.

The real significance of the 2005 hurricane season will only

be clear from the perspective of a decade or more hence.)

Is any of this unusual activity attributable

to global warming?

One of the predictions by those who

study global warming is that the number and severity of unusual

precipitation events will increase as the planet warms. Hurricanes

are unusual precipitation events. Although it is too soon to

assess whether the exceptional number of hurricanes predicted

for 2005 and thereafter is truly part of a natural cycle, or

whether they are some of the early effects of global warming,

it may not be premature to suggest that the general severity

of hurricanes is indeed increasing due to that warming. Hurricanes,

after all, are colossal heat pumps, transporting vast quantities

of tropical heat to more temperate regions.

Intensity of precipitation

events and temperature

The data on

this bar graph comes from 100 weather stations worldwide. Some

stations (51 of them) are represented in blue; these are stations

where temperatures range from -3°C to 19°C (26.6°F

to 66.2°F). Other stations (37 of them) have temperatures

in the 19°C to 29°C (66.2°F to 84.2°F) range;

these are shown in pink. The hottest stations (12), with temperatures

between 29°C and 35°C (84.2°F to 95°F) are indicated

in red. All stations have an average of about 230 millimeters

(about nine inches) of seasonal precipitation.

The daily precipitation at these stations has been divided according

to amounts in tens of millimeters (each millimeter = 1/25th of

an inch). Thus, the first set of bar graphs on the left shows

how much of the total precipitation (of the 230 millimeters or

nine inches) falls in events where the amount is about 10 mm

(about 0.4 inch). For the coolest stations (blue), over 30% of

total precipitation falls in such events. But for the warmest

stations (red), less than 20% falls in these limited precipitation

events.

Now look at the right side of the graph. Here is the symbol >100,

meaning that these are precipitation events where more than (>)

100 millimeters falls in a day. For the coolest stations (blue),

only a percent or two of total precipitation falls in such events.

But for the warmest stations (red), a much higher percentage

(4-5%) of total precipitation falls in such precipitation events.
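The way the graph sorts precipitation can be expressed as a short procedure: assign each day's total to a 10-millimeter class (plus an open class above 100 millimeters), add up the precipitation in each class, and divide by the seasonal total. The Python sketch below does this for two made-up seasons that each total 230 millimeters, one of steady 10-millimeter days and one with a single 110-millimeter downpour. The data and the function name are hypothetical, chosen only to show how the same total can be concentrated differently.

```python
import math
from collections import defaultdict


def share_by_intensity(daily_mm: list[float], bin_mm: float = 10.0,
                       cap_mm: float = 100.0) -> dict[str, float]:
    """Return {class label: fraction of total precipitation} for wet days.

    Classes are (0, 10], (10, 20], ... (90, 100] mm, labelled by their upper
    bound, plus an open-ended class for days above cap_mm.
    """
    sums: dict[str, float] = defaultdict(float)
    for amount in daily_mm:
        if amount <= 0:
            continue  # dry day: contributes nothing
        if amount > cap_mm:
            label = f">{cap_mm:g}"
        else:
            label = f"{math.ceil(amount / bin_mm) * bin_mm:g}"
        sums[label] += amount
    total = sum(sums.values())
    return {label: s / total for label, s in sums.items()}


cool_season = [10.0] * 23            # 230 mm in moderate daily amounts
warm_season = [10.0] * 12 + [110.0]  # 230 mm, almost half in one event
print(share_by_intensity(cool_season))
print(share_by_intensity(warm_season))
```

Both seasons deliver the same 230 millimeters, but in the second nearly half of it falls in the ">100" class, which is the kind of shift the graph shows for the warmest stations.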

The message of the graph is this: that in warmer conditions,

even with the same total amount of precipitation falling, precipitation

tends to be concentrated into bigger precipitation events. In

a warmer world, therefore, we ought to expect more severe precipitation

events, even if the total amount of precipitation remains the

same. But, of course, a warmer atmosphere will hold more moisture,

and the severity of extreme precipitation events will be even

greater (Karl and Trenberth, 2003).

A new computer model

of projected extreme weather events for central North America

during the next century seems to confirm these forecasts. Using

five months' worth of supercomputer time and enormous amounts

of data, the resulting maps show in great detail the anticipated

changes in extremely hot and cold events, as well as in extreme

precipitation events. While the number of extremely cold events

drops significantly, as would be expected in a warmer world,

both the number of extremely hot events and the number of extremely

wet events increase, in some cases quite significantly. For

example, the desert Southwest will experience more than five

times as many heat waves, and they will be of as much as five

times longer duration than those of today. The northeastern US

will experience heat waves typical of the summer's two hottest

weeks -- but extending over two months. Even those parts of the

US which usually have the fewest extremely hot events will have

twice as many. The Gulf Coast, battered by hurricanes in the

past few years, will not only have more heat waves, but also

more rain and stronger precipitation events. Assuming that the

model's projections can be extended to the nearby Gulf waters,

that is a prescription for more, and more intense, hurricanes.

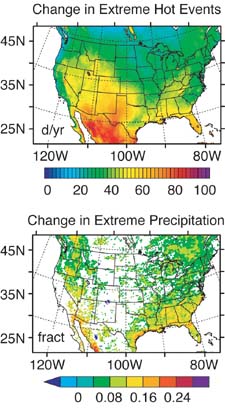

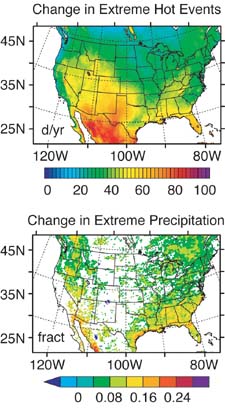

Extreme weather

event changes in central North America over the coming century. The upper map shows projected

changes in the number of extremely hot days. Some parts of the

Southwest will have almost three months more heat waves than

at present. The lower map indicates the projected increase in

extreme precipitation events, as a fraction of today's extreme

precipitation events. (Thus, 0.08 represents an 8% increase in

extreme precipitation events; 0.16, a 16% increase; and 0.24,

a 24% increase.) Worst hit will be the Gulf Coast (Diffenbaugh,

2005; Boutin, 2005).


That the general severity of hurricanes

may be increasing is the conclusion of a study published early

in the same month that Hurricane Katrina ravaged New Orleans. (Hurricane

Katrina was not an exceptionally severe hurricane, though it

was exceptionally large and struck in a particularly vulnerable

and inadequately prepared area. However, a later hurricane of

the 2005 season, Wilma, briefly became the most intense Atlantic

hurricane ever recorded. It went from a tropical storm, with

maximum winds of 119 kilometers per hour/74 mph, to a category

5 hurricane, with winds over 249 km/155 mph, in a single day.)

According to the study (Emanuel, 2005a), the severity of hurricanes,

as measured by wind speeds and duration, has increased by some

50% over the course of the last 30 years. (This study found no

evidence for an increase in the number of hurricanes.) Elsewhere,

things are even worse: the same study indicates that storms in the northern

Pacific have increased in strength by 75% during the same period

of time. Though this study has its critics (see Landsea, 2005,

and Pielke, 2005), its author stands by his conclusions, noting

that they draw on about 100 times more data than his critics'

analyses, which look only at hurricane wind speeds at landfall

(Emanuel, 2005b).

A second study confirms that the percentage

of intense hurricanes has increased over the past 35 years, even

while the total number of hurricanes and their maximum wind

speeds (about 290 kilometers per hour/180 mph) have remained

roughly the same. In those 35 years, the number of the most intense

hurricanes (categories 4 and 5) has almost doubled, and that

number, as a percentage of all hurricanes, has risen from about

20% to about 35%. The same period has seen a decline in the number

and percentage of weaker (categories 1, 2, and 3) hurricanes

(Webster, 2005).
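The "percentage of intense hurricanes" is a count of storms by category. The sketch below assumes each storm is reduced to its peak sustained wind in mph. It also assumes the current Saffir-Simpson lower bounds for categories 1 through 5 (74, 96, 111, 130 and 157 mph). The exact boundaries have shifted slightly over time; the text above uses the 2005-era figure of 155 mph for category 5. The season's wind speeds are hypothetical.

```python
from bisect import bisect_right
from typing import Sequence

# Assumed lower bounds (mph) of Saffir-Simpson categories 1-5.
THRESHOLDS_MPH = (74, 96, 111, 130, 157)


def category(peak_wind_mph: float) -> int:
    """Saffir-Simpson category for a peak wind; 0 means below hurricane strength."""
    # bisect_right counts how many lower bounds the wind speed has reached.
    return bisect_right(THRESHOLDS_MPH, peak_wind_mph)


def intense_share(peak_winds_mph: Sequence[float]) -> float:
    """Fraction of hurricanes (category >= 1) that reached category 4 or 5."""
    cats = [category(w) for w in peak_winds_mph]
    hurricanes = [c for c in cats if c >= 1]
    if not hurricanes:
        return 0.0
    return sum(1 for c in hurricanes if c >= 4) / len(hurricanes)


# Hypothetical season: one tropical storm (60 mph) and five hurricanes.
season = [60, 80, 100, 115, 140, 160]
print([category(w) for w in season])   # categories 0 through 5
print(intense_share(season))           # 2 of 5 hurricanes are category 4 or 5
```

Tropical storms are excluded from the denominator, so the share can rise even when the total number of hurricanes does not.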

Hurricane intensity, 1970-2004. This graph shows the changes in hurricane intensity

for all oceans (in some parts of the world, hurricanes are known

as cyclones or typhoons). The yearly data has been grouped into

"pentads," that is, groups of five years. This is a

statistical method used to reduce the influence of exceptional

years on the results, and to make general trends more visible

(Webster, 2005).
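Grouping yearly values into pentads amounts to averaging consecutive five-year blocks. The sketch below assumes one value per year and labels each pentad by its first year. The yearly counts are invented, with one deliberately exceptional year to show how the averaging damps it.

```python
from statistics import mean
from typing import Dict, List, Sequence


def pentad_means(years: Sequence[int], values: Sequence[float]) -> Dict[int, float]:
    """Average yearly values into five-year groups keyed by each group's first year."""
    if len(years) != len(values):
        raise ValueError("years and values must have the same length")
    start = min(years)
    groups: Dict[int, List[float]] = {}
    for year, value in zip(years, values):
        key = start + 5 * ((year - start) // 5)   # first year of this pentad
        groups.setdefault(key, []).append(value)
    return {key: mean(vals) for key, vals in sorted(groups.items())}


# Hypothetical yearly counts; 1972 is an exceptional year.
years = list(range(1970, 1980))
counts = [10.0, 12.0, 30.0, 11.0, 12.0, 14.0, 15.0, 16.0, 17.0, 18.0]
print(pentad_means(years, counts))
```

The spike of 30 in 1972 becomes part of a pentad average of 15, so the gentle rise to 16 in the next pentad stays visible instead of being masked by a single unusual year.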

Some scientists challenge these conclusions.

But the challengers, as scientists, must demonstrate that the conclusions

do not follow from the evidence, or that there is contravening

evidence. At this point, the conclusions stand.

And there may be good reason that they

should. The increase in intensity of major storms over recent

decades seems closely tied to the increase of ocean surface temperature

during the same time. According to Kerry Emanuel, the lead author

of the study, "The total energy dissipated by hurricanes

turns out to be well correlated with tropical sea surface temperatures.

The large upswing in the past decade is unprecedented and probably

reflects the effects of global warming" (quoted in Verrengia,

2005). Storm intensity is measured by wind speeds and storm duration.

[It should be noted that both the hurricane intensity study and

Emanuel's comments date from several weeks before Hurricane Katrina

struck.] The strong link between hurricane intensities and sea

surface temperatures (as opposed to other possible influential

factors) received additional confirmation in a study published

in March of 2006. The link was found in all oceans that have

such storms (Hoyos, 2006).
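Emanuel's 2005 study combines wind speed and duration in a single "power dissipation index": the cube of a storm's maximum sustained wind speed, integrated over the storm's lifetime. The sketch below approximates that integral as a simple sum. It assumes wind samples in meters per second taken every six hours, a common interval in storm track records. The sample storms are hypothetical, and this is not Emanuel's own processing code.

```python
from typing import Sequence


def power_dissipation_index(max_winds_ms: Sequence[float], dt_hours: float = 6.0) -> float:
    """Approximate PDI = integral of V_max**3 dt over a storm's life, in m^3 s^-2."""
    dt_seconds = dt_hours * 3600.0
    # Each sample stands for one interval of length dt at that wind speed.
    return sum(v ** 3 for v in max_winds_ms) * dt_seconds


base = power_dissipation_index([10.0] * 4)       # four 6-hour samples at 10 m/s
stronger = power_dissipation_index([11.0] * 4)   # same duration, winds 10% higher
longer = power_dissipation_index([10.0] * 8)     # same winds, twice the duration
print(stronger / base, longer / base)
```

Because wind speed enters as a cube, winds only 10% stronger raise the index by about a third, while doubling a storm's life simply doubles it. This is why modest shifts in storm strength can show up as large changes in total dissipated energy.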

Over the past 40 years, the upper ocean

(down to about 300 meters/yards) has warmed -- as a result of

the heating of the atmosphere by greenhouse gases -- by an average

of 0.5°C (0.9°F: Barnett, 2005). This warming is present

in all oceans to a greater or lesser degree, according to independent

studies with millions of data points (Levitus, 2000; Levitus,

2001; Levitus, 2005).
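A warming of half a degree sounds small, but the amount of extra heat it represents can be estimated as density times specific heat times depth times temperature change, per square meter of ocean surface. The sketch below assumes typical seawater values of about 1025 kg per cubic meter and about 3990 joules per kilogram per degree. These figures are not from the text, and the result is only an order-of-magnitude illustration.

```python
# Assumed typical seawater properties (not from the text).
SEAWATER_DENSITY = 1025.0         # kilograms per cubic meter
SEAWATER_HEAT_CAPACITY = 3990.0   # joules per kilogram per degree C


def added_heat_per_m2(warming_c: float, depth_m: float) -> float:
    """Extra heat (joules) stored beneath one square meter of ocean surface."""
    return SEAWATER_DENSITY * SEAWATER_HEAT_CAPACITY * depth_m * warming_c


# The text's figures: 0.5 degrees C of warming in the top 300 meters.
q = added_heat_per_m2(0.5, 300.0)
print(f"{q:.3g} joules per square meter")
```

Under these assumptions, the upper ocean holds roughly 600 million joules of additional heat beneath every square meter of surface. Hurricanes can draw on that reservoir.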

Warmer oceans contain more heat, which

drives hurricanes; they also evaporate more water, adding to

the severity of precipitation events. So there is a specific

cause-and-effect relationship between oceanic warming and hurricane

intensity. Consequently, it would be surprising if hurricane

intensity had not increased as a result of oceanic warming. One

would have to wonder why the predicted effect had not occurred

when the causative agent was present. It would be rather like

dropping a stone, only to have it float in the air.

So, did global warming specifically

contribute to the size and ferocity of Hurricane Katrina?

Most likely, yes. Both the North Atlantic,

in which Katrina formed, and the Gulf of Mexico, where it grew

to a major hurricane, have been warmed by the action of anthropogenic

greenhouse gases. The Gulf of Mexico, for example, was 2°C

to 3°C (3.6°F to 5.4°F) warmer in early August than

is usual for that time of year (Schiermeier, 2005c); seawater

temperatures there were unprecedented. This allowed Katrina to

suck "so much heat from the gulf that water temperatures

dropped dramatically after it had passed, in some regions from

30°C to 26°C" [86°F to 78.8°F] (Schiermeier,

2005c).
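The conversions in this passage use two different rules. A temperature difference (such as "2°C to 3°C warmer") only scales by 1.8. An actual temperature (such as 30°C) also needs the 32-degree offset. The sketch below applies both rules to the figures quoted above. It also shows the error that results from mixing them up.

```python
def c_to_f(temp_c: float) -> float:
    """Convert an actual temperature from Celsius to Fahrenheit."""
    return temp_c * 1.8 + 32.0


def c_diff_to_f_diff(diff_c: float) -> float:
    """Convert a temperature difference; no offset, since the offsets cancel."""
    return diff_c * 1.8


print(c_diff_to_f_diff(2.0), c_diff_to_f_diff(3.0))   # Gulf anomaly: about 3.6 and 5.4
print(c_to_f(30.0), c_to_f(26.0))                     # about 86 and 78.8 degrees F
print(c_to_f(2.0))   # wrong rule for an anomaly: about 35.6, not 3.6
```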

Both Katrina and the next major hurricane

to hit the US mainland in the 2005 hurricane season, Rita, seem

to have drawn their increased strength while passing over a "loop

current" or its giant eddies in the Gulf of Mexico. This

current carries warm water from the Caribbean through the Yucatan

Channel (the passage between the Yucatan Peninsula and western

Cuba), into the Gulf, and then out into the Atlantic via the

Straits of Florida (between Florida and northern Cuba). The loop

current and its eddies provide a deep (to water depths of 100

meters/yards or so) source of heat upon which passing tropical

storms or weaker hurricanes can build into major hurricanes (Revkin,

2005).

Sea Surface Temperatures

on August 27, 2005, as Hurricane Katrina approached the Gulf

Coast. Hurricanes require sea surface

temperatures of at least about 26°C (78.8°F) in order to form.

Sea surface temperatures can only provide a general indication

of hurricane-building potential, however, because hurricanes

draw their strength from waters as deep as many tens of meters/yards

(NOAA).


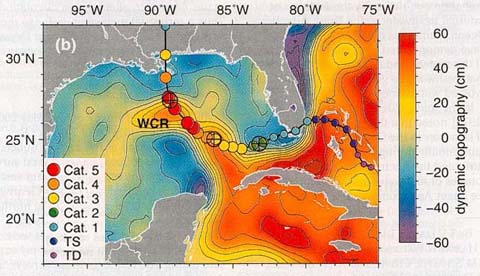

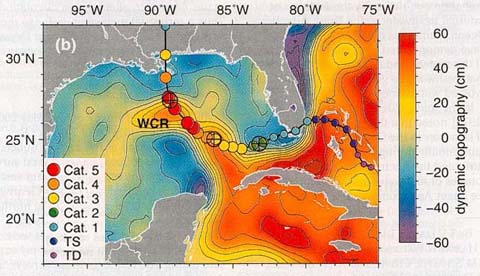

Deeper water temperatures

and Katrina. Although rising

sea surface temperatures are generally correlated with increased

hurricane intensity, hurricanes draw their strength from more

than just the surface of the ocean. Deeper ocean warmth is required.

This satellite image shows why Katrina evolved into a major hurricane

(and also why it lost strength just before reaching the Gulf

Coast). Instead of showing sea surface temperatures, it displays

the relative height of the ocean surface. Reds and yellows indicate

a somewhat greater height to the sea surface, as measured in

centimeters (a centimeter is about 2/5 of an inch). Because water expands as it warms,

a higher sea surface indicates that the water below is generally

warmer than in areas where the sea surface is lower (and the

water below is therefore cooler). The image clearly shows the

loop current, which swings up out of the Caribbean in the south,

into the Gulf of Mexico west of Cuba, and then moves east between

Cuba and Florida. A large blob of warm water, called a warm-core

ring (WCR), has spun off the loop current. Katrina's path (from

right to left in this image) took it over the loop current, and

then over the warm-core ring, which enormously strengthened the

storm's intensity. (TD = tropical depression; TS = tropical storm;

please disregard the larger circles with crosshairs, which refer